The relationship between Artificial Intelligence (AI) and democracy presents a complex array of both opportunities and risks. AI affects democratic systems in several ways, including how it contributes to misinformation and disinformation, and how it interacts with content that sits on the border of legality and harm. Exploring AI’s ability to both support and undermine democratic ideals brings to light its extensive influence on the digital information landscape, a crucial element for ensuring the integrity of democratic processes.

Understanding AI’s Role in Democracy

As defined by the OECD, AI systems are characterised by their ability to interpret inputs and generate outputs, such as predictions and decisions, that can influence both physical and virtual environments. This capability positions AI as a significant shaper of the democratic fabric, offering tools for enhanced engagement yet posing risks to the integrity of democratic discourse.

The Dual Nature of AI in Democratic Societies

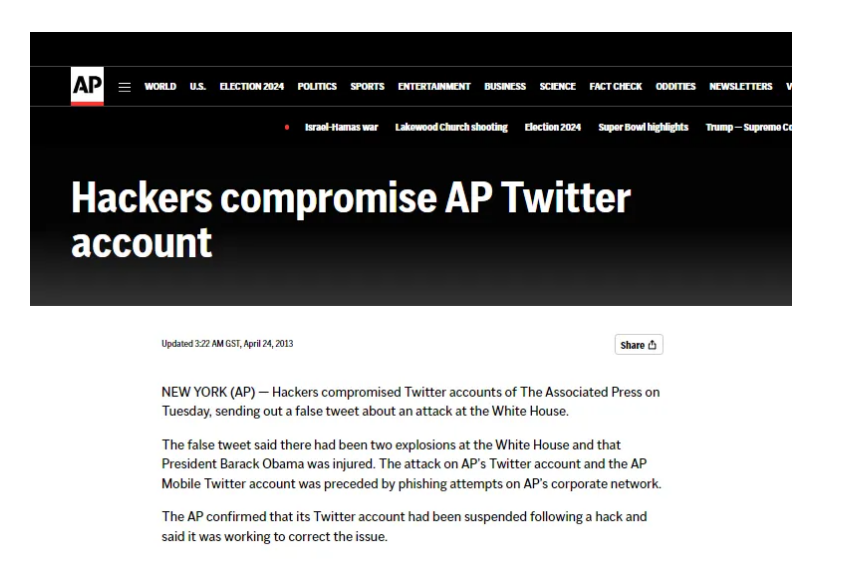

AI has revolutionized information dissemination and creation, providing unparalleled opportunities for democratic participation. AI-driven platforms can make it easier for people to vote, engage in public consultations, and interact with their representatives. Yet this promise comes with perils. The spread of disinformation, exemplified by incidents like the hijacking of the Associated Press account on Twitter (now known as X), shows how quickly false information can destabilize democratic societies, a risk that AI can amplify. The incident occurred in April 2013, when hackers took control of the Associated Press’s Twitter account and falsely reported explosions at the White House, leading to a temporary stock market plunge.

The hijacking of the Associated Press Twitter account and the false reports of explosions at the White House underscore the grave impact disinformation can have on democracy. Such incidents erode trust in media, government institutions, and public discourse, while causing market volatility and potentially influencing political outcomes. Moreover, they exacerbate social divisions and are difficult to respond to effectively. To safeguard democratic processes, collaboration among governments, media, tech companies, and civil society is imperative, with an emphasis on media literacy, cybersecurity, transparency, and accountability measures to combat disinformation and uphold the integrity of information.

AI and the Digital Information Ecosystem

AI’s imprint on the digital information ecosystem highlights a critical balance between promoting access to diverse, accurate information and mitigating the risks of misinformation. While AI equips malicious actors with powerful tools for crafting and spreading misinformation and disinformation, it also offers mechanisms for detecting and moderating harmful content, which can improve the safety and integrity of the online information ecosystem.

From detecting hate speech to mitigating the spread of health-related misinformation, AI’s application in content moderation underscores its potential as a force for good. The ongoing debate among experts regarding AI’s impact on misinformation, with some arguing that its effects may be overstated in wealthier democracies, emphasizes the need for a nuanced understanding of AI’s role in shaping public discourse.

Deepfakes: A Challenge to Democratic Integrity

The emergence of deepfakes, powered by AI-driven tools, introduces a formidable challenge to the authenticity of digital content, with significant implications for privacy, security, and democratic discourse. Deepfakes may exacerbate broader societal concerns relating to political trust and polarisation. The development underscores the urgent need for legislative responses and proactive measures to counter the potential misuse of such technologies.

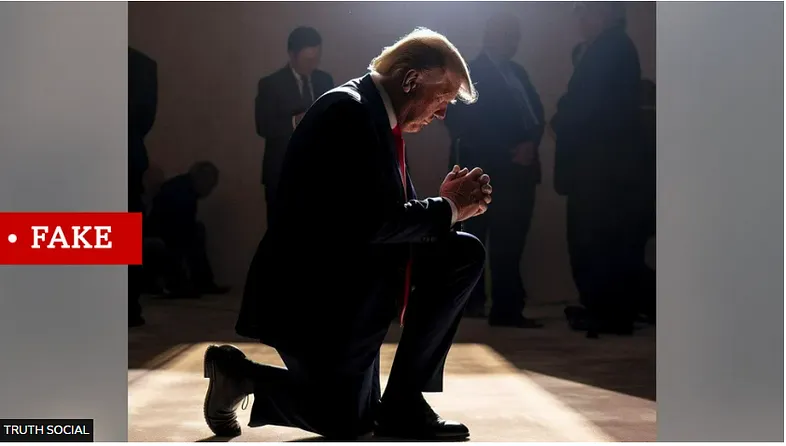

The development of AI-driven tools like Midjourney and OpenAI’s DALL-E, capable of generating highly realistic images and videos, has significantly blurred the lines between reality and fiction. These technologies have enabled the creation of content that depicts events or actions that never happened, ranging from fabricated political statements to manipulated personal images. For instance, a viral image misleadingly showing a prominent political figure being arrested shows how easily public perception can be altered. Similarly, a deepfake video of a national leader urging soldiers to surrender demonstrates the potential of such technology to disrupt political and social stability.

One notable example of a deepfake is a video that surfaced in 2019, appearing to show Facebook CEO Mark Zuckerberg delivering a speech about the power of Facebook. The video did not show the real Zuckerberg. It was a deepfake created by artists Bill Posters and Daniel Howe in collaboration with advertising company CannyAI and the visual effects studio Framestore. The video was intended to raise awareness of the potential dangers of deepfake technology and its implications for misinformation and public trust. This example highlights how advanced technology can be used to create convincing fake videos of influential figures, further complicating efforts to discern truth from fiction in the digital age.

The accessibility of these tools means that anyone with basic technical skills can create convincing fake images or videos, posing a significant risk to individuals’ privacy and security. Deepfakes have been used to generate non-consensual intimate content, leading to psychological harm and damage to individuals’ reputations, as in the case of Taylor Swift, who has been targeted by deepfakes. The White House called the circulation of explicit AI-generated images of the pop superstar “alarming”, while the social media platform X temporarily blocked users from seeing some search results as pornographic deepfake images of the singer circulated online.

There is also the case of a mother who was duped into believing her daughter had been kidnapped by a phone call that used an AI-simulated voice, which underscores the potential for deepfakes to cause personal trauma.

In response to the growing threat posed by synthetic media, or deepfakes, various jurisdictions have introduced laws aimed at curbing the distribution of non-consensual deepfake content. The UK’s Online Safety Act 2023 and similar legislation in the US highlight a global recognition of the need to offer legal remedies to victims of deepfake abuse. Rep. Joseph Morelle (D-N.Y.) unveiled a bill in May 2023 to make sharing deepfake pornography illegal. In October 2023, President Biden signed a sweeping executive order on artificial intelligence focused on harnessing the emerging technology and managing its risks.

The order included several new actions, which focus on areas like safety, privacy, protecting workers, and protecting innovation. However, the effectiveness of these laws hinges on the ability of law enforcement to detect and prosecute offenders, a task complicated by the sophisticated nature of AI-generated content.

AI in Dissemination and Targeting

Social bots, automated programs designed to mimic human activity on social media, have evolved significantly since their inception. Initially simple and script-based, these bots have increasingly incorporated advanced AI, including Large Language Models (LLMs) like ChatGPT, to more authentically replicate human interaction styles and content creation. Despite the potential for positive use, social bots have often been deployed with malicious intent, such as election interference.

A notable case reported by The Guardian in 2023 involved an investigation into ‘Team Jorge’, an Israeli firm selling sophisticated political influence campaigns. Its software, “Advanced Impact Media Solutions” (Aims), managed thousands of fake profiles across major social platforms, demonstrating the complex networks that can be orchestrated to manipulate public opinion. The sophistication of AI-enhanced social bot networks reveals the complexities of digital disinformation campaigns, posing direct threats to democratic processes.

Beyond content generation, AI can refine how audiences are targeted and interacted with, enhancing the personalization and effectiveness of messaging. AI can also streamline the spread of misinformation, impacting the scope and efficiency of political influence campaigns. The process involves both the distribution of content and political micro-targeting. Artificial intelligence is already showing up in the 2024 US presidential election. AI-generated fundraising emails, chatbots that urge you to vote, and maybe even deepfaked avatars are expected. The next wave of candidates might use chatbots trained on their views and personalities.

For instance, a Canadian political party created, and swiftly deleted, a video of an AI bot endorsing it. “We tried something new, and it turned out to be bad,” the Alberta Party said in a tweet.

A 2023 study revealed that X, formerly known as Twitter, has a real bot problem, with about 1,140 artificial intelligence-powered accounts that “post machine-generated content and steal selfies to create fake personas.” The “Fox8” botnet, which reportedly utilized ChatGPT to generate content and automate its operations, “aims to promote suspicious website and spread harmful content.” The bot accounts attempted to convince people to invest in fake cryptocurrencies, and are even thought to have stolen from existing crypto wallets.

The hazard is well-recognized: a well-configured ChatGPT-based botnet would be challenging to identify and could effectively deceive users and manipulate social media algorithms.

Yet, the evolving capabilities of AI also present opportunities for innovative detection and mitigation strategies, highlighting the ongoing battle between AI’s misuse and its potential for safeguarding democracy.

The Dilemma of Online Political Micro-Targeting

The controversies surrounding political micro-targeting (PMT) using AI serve as a reminder of the threats it poses to democratic processes, specifically in relation to the speed and reach of misinformation. Political campaigns have historically relied on data to understand voter preferences.

However, AI-powered predictive analytics, fueled by vast amounts of digital data, are supplanting traditional polling methods. Today, a global network of commercial, social media, and political entities trades vast datasets on individuals, employing AI and machine learning to further dissect and utilize this information for political ends.

The Intersection of Coordinated Inauthentic Behavior (CIB) and AI

Beyond social bots, the realm of coordinated inauthentic behavior (CIB) involves using multiple accounts in concert to deceive and mislead. Unlike straightforward bot operations, CIB can involve human-operated accounts, or ‘sock puppets’, and sometimes employs generative AI to create convincing fake identities. An example from Meta’s Q1 2023 report uncovered a CIB network from China, fabricating personas and groups to spread specific political narratives. The accounts criticised the Indian government and “questioned claims of human-rights abuses in Tibet raised by Western journalists”, occasionally posting articles by legitimate news media outlets to appear authentic.
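One simple signal of the coordination described above is several accounts posting near-identical text. The following is a minimal sketch of that idea, assuming posts are available as plain strings keyed by account name. The function names, the three-word shingle size, and the 0.6 threshold are illustrative assumptions, not a description of how Meta or any other platform detects CIB.

```python
from itertools import combinations


def shingles(text, k=3):
    """Return the set of k-word sequences in a post (hypothetical helper)."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)}
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets: shared items divided by all items."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def coordinated_pairs(posts, threshold=0.6):
    """Return account pairs whose posts overlap at or above the threshold.

    posts maps an account name to one post's text. The threshold is an
    assumed value chosen for illustration only.
    """
    sets = {account: shingles(text) for account, text in posts.items()}
    return sorted(
        (a, b)
        for a, b in combinations(sorted(sets), 2)
        if jaccard(sets[a], sets[b]) >= threshold
    )
```

Text overlap alone would also flag ordinary reposts and quotations, so a real investigation would combine it with other signals such as account creation dates and posting times.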

These instances underline the sophisticated use of AI in manufacturing and spreading disinformation, demonstrating the technology’s dual-use nature. As AI continues to advance, distinguishing between genuine and AI-generated content becomes increasingly challenging, necessitating new strategies for detection and counteraction.

The Weaponization of Information and AI’s Ambivalent Role

The weaponization of information through AI transcends mere disinformation, embodying a broader strategy of information warfare that threatens the stability of democratic societies. AI’s capacity to generate and disseminate false narratives at scale, exemplified by bots amplifying divisive content on social media, highlights the urgent need for countermeasures. Addressing these threats necessitates a comprehensive approach that includes technological solutions, regulatory frameworks, and public awareness initiatives to safeguard democratic processes and foster resilience against misinformation.

Navigating the Future of AI and Deepfakes

As AI continues to advance, the challenge of distinguishing between genuine and AI-generated content will only intensify. The potential for deepfakes to be used in misinformation campaigns and political manipulation raises significant concerns for the fabric of democratic societies. While AI offers incredible opportunities for innovation and creativity, the misuse of technologies like deepfakes necessitates a vigilant and proactive approach to safeguard individual rights and maintain the integrity of public discourse.

The capabilities of AI can be harnessed to provide effective responses to detrimental online actions, reducing the reliance on content deletion or de-platforming, which might infringe on freedom of expression. This application illustrates AI’s beneficial impact on fostering safer digital spaces, while raising dual-use concerns that necessitate careful consideration and ethical oversight.

Social media giants, including Meta, leverage AI for detecting and addressing harmful content. In 2021, Meta introduced an AI tool known as the “Few-Shot Learner” (FSL), which employs a method allowing it to quickly adapt and respond to new or evolving types of harmful content with minimal examples. This approach, known as “few-shot learning,” enables the AI to classify new data with limited initial input, a significant advancement over traditional machine learning that requires extensive data sets and human oversight. FSL’s capabilities have been particularly effective in identifying content related to violence incitement and the spread of misinformation concerning COVID-19 vaccines.
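The idea behind few-shot learning, classifying new content from only a handful of labelled examples, can be illustrated with a toy nearest-prototype classifier. This is a minimal sketch under simplifying assumptions: it uses word counts rather than a learned language model, and the labels and example posts are invented for illustration. It is not Meta’s Few-Shot Learner.

```python
import math
from collections import Counter


def embed(text):
    """Represent a post as a bag of lowercase words (a stand-in for a real embedding)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_prototypes(support):
    """Sum the vectors of the few labelled examples for each label."""
    prototypes = {}
    for label, texts in support.items():
        total = Counter()
        for text in texts:
            total.update(embed(text))
        prototypes[label] = total
    return prototypes


def classify(text, prototypes):
    """Assign the label whose prototype is most similar to the post."""
    vector = embed(text)
    return max(prototypes, key=lambda label: cosine(vector, prototypes[label]))


# Two invented examples per label: the "few shots".
SUPPORT = {
    "vaccine_misinfo": [
        "the vaccine alters your dna",
        "vaccine contains tracking microchips",
    ],
    "benign": [
        "the clinic opens at nine tomorrow",
        "i got my vaccine appointment booked",
    ],
}
PROTOTYPES = build_prototypes(SUPPORT)
```

Calling `classify("they say the vaccine contains microchips", PROTOTYPES)` returns `"vaccine_misinfo"`. Adding a new category only requires a few more examples in `SUPPORT`, which is the property that lets such systems adapt quickly to new kinds of harmful content.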

Meta has been working with industry partners on common technical standards for identifying AI content, including video and audio, and on developing tools to mitigate the risks of deepfakes, as described in its February 2024 announcement “Labeling AI-Generated Images on Facebook, Instagram and Threads”.

Similarly, TikTok utilizes AI-driven tools to automatically screen for content that breaches its guidelines, including those related to minor safety, nudity, violence, and illegal activities. These automated systems are critical in managing content at scale and ensuring user safety.

For the detection of deepfakes, AI systems based on neural networks, a fundamental component of deep learning, show the most promise. Recent evaluations of deepfake detection techniques highlight the superiority of deep learning models over traditional methods, demonstrating AI’s potential to outperform human accuracy in identifying manipulated content. Notably, the most effective AI models for deepfake detection rely on analyzing facial features and expressions, indicating the nuanced capabilities of these systems in distinguishing authentic from fabricated content.

Conclusion: Embracing AI with Optimism and Caution

The intersection of AI with democracy is marked by a dynamic interplay of opportunities and challenges. The challenges posed by the advancement of AI technologies, particularly in the realm of digital misinformation and cybersecurity, are multifaceted and evolving. They include:

- The creation and dissemination of highly convincing and personalized disinformation across various media forms with minimal effort from the perpetrators.

- The utilization of advanced offensive cyber capabilities by malicious actors, potentially leading to severe and widespread damage.

- The possibility of AI systems operating beyond human oversight, employing strategies of deception and concealment to evade detection.

As Large Language Model (LLM)-powered social bots advance, their detection becomes increasingly challenging. These bots might sidestep identification by avoiding overt indicators of AI involvement, either by using open-source LLMs lacking the built-in safeguards of platforms like ChatGPT or by filtering out any content that could reveal their artificial nature. Furthermore, the potential for social bots to evolve into fully independent entities capable of autonomously gathering information and making decisions is on the horizon. This autonomy could be facilitated by the integration of application programming interfaces (APIs) and search technologies, amplifying their ability to act without human intervention.

As we advance, it becomes imperative to harness AI’s potential to enrich democratic engagement while vigilantly mitigating its risks. The future of democracy in the digital age depends on our collective efforts to navigate the complexities of AI, ensuring that it serves as a pillar of support rather than a source of destabilization. This requires ongoing vigilance, adaptation, and commitment to preserving the core values of a democratic society.

Sources:

- Alberta Party tweet. (2023). Retrieved from https://shorturl.at/dvEKZ

- Associated Press. (n.d.). Hackers compromise AP Twitter account. AP News. Retrieved from https://apnews.com/general-news-68a43f18c9aa4a87bfbf350a566404c9

- Associated Press Corporate Communications [@AP_CorpComm]. (2013, April 23). [Tweet]. X. Retrieved from https://x.com/AP_CorpComm/status/326745628535300096?s=20

- BBC News. (n.d.). Fake Trump arrest photos: How to spot an AI-generated image. Retrieved from https://www.bbc.com/news/world-us-canada-65069316

- Canny AI. (n.d.). Retrieved from http://cannyai.com/

- Clegg, N. (2024, February 6). Labeling AI-Generated Images on Facebook, Instagram, and Threads. Meta. Retrieved from https://about.fb.com/news/2024/02/labeling-ai-generated-images-on-facebook-instagram-and-threads/

- “Congressman Joe Morelle Authors Legislation to Make AI-Generated Deepfakes Illegal.” (n.d.). Office of Congressman Joe Morelle. Retrieved from https://morelle.house.gov/media/press-releases/congressman-joe-morelle-authors-legislation-make-ai-generated-deepfakes

- “Deepfake Explicit Images of Taylor Swift Spread on Social Media. Her Fans Are Fighting Back.” (n.d.). AP News. Retrieved from https://apnews.com/article/taylor-swift-ai-images-protecttaylorswift-nonconsensual-d5eb3f98084bcbb670a185f7aeec78b1

- Framestore. (n.d.). Retrieved from https://www.framestore.com/

- Gans, J. (2023, May 5). NY Democrat Unveils Bill to Criminalize Sharing Deepfake Porn. The Hill. Retrieved from https://thehill.com/homenews/house/3990659-ny-democrat-unveils-bill-to-criminalize-sharing-deepfake-porn

- Gangitano, A. (2023, October 30). Biden Issues Sweeping Executive Order on AI. The Hill. Retrieved from https://thehill.com/homenews/administration/4282336-biden-issues-sweeping-executive-order-on-ai/

- Kirchgaessner, S. (2023, February 15). How Undercover Reporters Caught ‘Team Jorge’ Disinformation Operatives on Camera. The Guardian. Retrieved from https://www.theguardian.com/world/2023/feb/15/disinformation-hacking-operative-team-jorge-tal-hanan

- Kundu, R. (n.d.). Everything You Need to Know About Few-Shot Learning. Paperspace Blog. Retrieved from https://blog.paperspace.com/few-shot-learning/

- Meta. (2018). Coordinated Inauthentic Behavior. Retrieved from https://about.fb.com/news/tag/coordinated-inauthentic-behavior/

- Nimmo, B., & Gleicher, N. (2023, May 3). Meta’s Adversarial Threat Report, First Quarter 2023. Meta. Retrieved from https://about.fb.com/news/2023/05/metas-adversarial-threat-report-first-quarter-2023/

- OECD. (n.d.). AI Principles. Retrieved from https://oecd.ai/en/ai-principles

- Online Safety Act 2023. (2023). Legislation.gov.uk. Retrieved from https://www.legislation.gov.uk/ukpga/2023/50/enacted

- Reshef, E. (2023, April 13). Kidnapping Scam Uses Artificial Intelligence to Clone Teen Girl’s Voice, Mother Issues Warning. ABC7 News. Retrieved from https://abc7news.com/ai-voice-generator-artificial-intelligence-kidnapping-scam-detector/13122645/